If only Don Draper, Mad Men’s famed Creative Director played by Jon Hamm, were here in the 21st century to help with this advertising firm’s failed network delivery of time-sensitive media. What would he do if suddenly dozens of the firm’s multi-billion-dollar Fortune 500 clients couldn’t get their advertising to market? Whatever he’d do, it would involve angst, cigarettes, alcohol and a woman other than his wife. If Mad Men keeps playing (almost into the 1970s) perhaps we will see how Don Draper operates in the digital non-smoking world, ha cough / choke.

Today’s creative teams depend upon electronic media being delivered across the world. Don Draper was not prepared for this kind of disaster, but my customer was: they readied media on DVDs and hard drives and put them on airplanes to get them to markets on time.

Meanwhile the network team gathered to solve the failing technology puzzle. That’s when I got the call to fly in to assist, and it wasn’t my first rodeo, as they say. I started analyzing networks to solve complex, high-visibility problems even before I joined the startup of Network General, the creators of the first application layer network analyzer: The Sniffer.

My involvement in critical problem resolution has taken me into stock markets where zero-day security attacks threatened trading, financial institutions, household-name manufacturing companies, the Pentagon to restore communications after the events of 9/11, and more recently the war surges in both Iraq and Afghanistan to optimize biometric applications responsible for sifting through millions of innocent civilians to definitively identify those responsible for terroristic activity. I enjoy the digital network forensics discipline, having trained 50,000 and certified over 3,000 of the world’s finest Certified NetAnalysts.

This problem provided all the elements of a good mystery: high visibility, high stakes, interesting characters and a complex set of puzzling symptoms requiring not just digital analysis tools and skills but a working knowledge of theory.

The symptoms: while high-speed transfers were in progress, something would cause them to abort. Not all transfers would abort, only some of them. And those that failed would always fail.

Something that repeatedly fails provides an opportunity to discover root cause. Definitive root cause analysis is often lost to knee-jerk actions taken in the hope of magically solving the problem, actions that provide the same kind of intermittent feedback that keeps people pulling slot machine handles. Some reactive measures actually exacerbate problems and destroy the opportunity to identify root cause. That’s not good, as those undiagnosed problems will revisit at the least convenient times. Don Draper, despite his vices, is a man who delivers. He would demand root cause analysis and effective mitigation by the team.

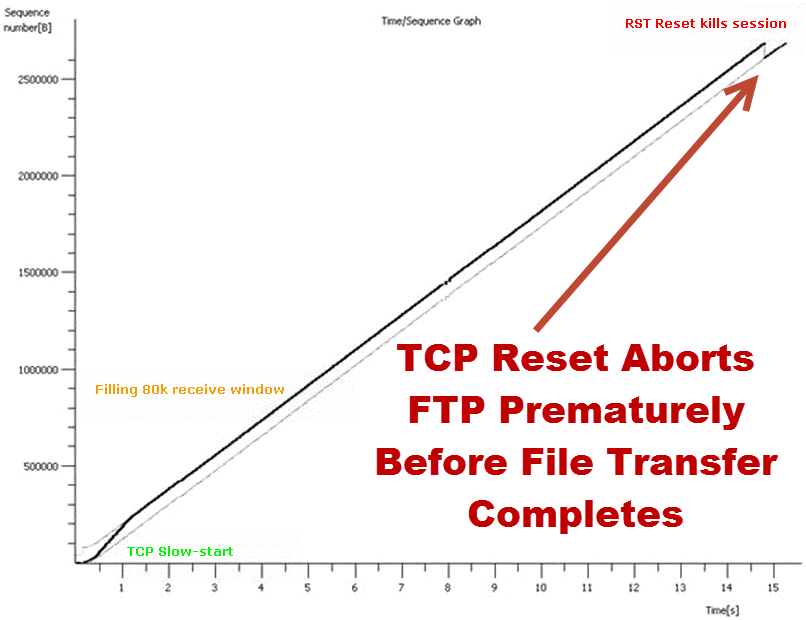

The aborted file transfers between distant locations were a challenge to analyze. How does one, after all, analyze inside or across the Internet? It’s done by capturing data where possible at one or more controlled test points. Ah, you have no test points? Yeah, that’s an issue: you believed the product and service vendor’s bull-spew that you would not have problems, and didn’t design test points across your network infrastructure to monitor and analyze problems. Fortunately my customer had designed test points inside their firewalls, servers and client machines, so we could capture packet trace evidence of the problem. We examined packets at one location and found that TCP/IP’s layer 4 protocol, TCP (Transmission Control Protocol), which is responsible for session setup and reliable data delivery, was sending a TCP session Reset command before the transfer completed.

A native TCP Reset is sent when a host wants to abruptly abort a session. For instance, a server that is being rebooted will gracefully Reset all outstanding TCP sessions before it drops offline. However, in this situation there was no reason for such a Reset.
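For readers who want to see what "a Reset" looks like in a trace, here is a minimal sketch of checking the RST bit in a raw TCP header. It is not the tooling we used on site; the function names and the hand-built sample header are assumptions for illustration. The only facts relied on are the standard TCP header layout, where the flags sit in byte 13 and RST is bit 0x04.

```python
import struct

# Flag bits in byte 13 of the TCP header (standard TCP header layout).
FIN, SYN, RST, PSH, ACK = 0x01, 0x02, 0x04, 0x08, 0x10


def is_reset(tcp_header: bytes) -> bool:
    """Return True if the RST flag is set in a raw TCP header."""
    if len(tcp_header) < 20:
        raise ValueError("a TCP header is at least 20 bytes")
    return bool(tcp_header[13] & RST)


def build_header(src_port: int, dst_port: int, seq: int, ack: int, flags: int) -> bytes:
    """Build a 20-byte TCP header for illustration (hypothetical helper, checksum left at 0)."""
    data_offset = 5 << 4  # 5 x 32-bit words, no options
    return struct.pack("!HHIIBBHHH", src_port, dst_port, seq, ack,
                       data_offset, flags, 65535, 0, 0)


# A made-up Reset segment and a made-up ordinary ACK segment.
reset_segment = build_header(51000, 443, 1000, 2000, RST | ACK)
ack_segment = build_header(51000, 443, 1000, 2000, ACK)
print(is_reset(reset_segment), is_reset(ack_segment))
```

In practice a protocol analyzer decodes this for you. The point is only that the Reset is a single bit, which is why anything in the path that can craft a packet can send one.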

Diagnosing problems is like peeling an onion: each layer reveals part of the story and determines the next step. I’m amused when naive technology managers think a problem can be solved with one piece of evidence… or that the exact steps can be foretold and planned in advance. That expectation is an easy way to gauge the sophistication of a technologist or technology manager. Imagine having a complex medical condition and expecting your physician to outline in detail each step and the outcome before running any tests. Or how about an attorney before a case goes to trial? In technical troubleshooting it is the same… you rely on the reputation and previous results of the practitioner more than anything else, asking: can this expert manage technology, people and resources to a successful result? Enterprise network applications are important to the life and productivity of an organization, so choose your practitioners wisely.

While we wondered why a TCP Reset was sent, we noticed that data collected independently at the other location indicated that the Reset was initiated by the other end. What? How could that be? In fact, evidence on both ends indicated the TCP Reset was initiated by the other! Impossible, yes, so why might this occur?

By now you may be thinking that it may have been initiated by a MITM (man-in-the-middle), and in this case that might not mean a nefarious MITM but our own internal MITM. Perhaps a security device such as a Firewall is detecting suspicious activity and sending out a TCP Reset to stop the activity? No, they thought it had to be a “bad guy,” as our stuff would never do such a thing because we did not configure that kind of behavior to occur.

So to track down this interloper, I took a look at TCP/IP’s network layer, IP, and its TTL (Time To Live) field, oft referred to as the router Hop Count because each router in the path decrements it, to corroborate a congruent number of router hops between the two nodes. Normal packet exchanges between the two nodes verified the same number of router hops. Oh, hold on a minute, the TCP Reset Control exchanges have differing Hop Counts in both directions! What have we here? An incongruence for sure. Has the MITM exposed itself by maintaining its own hop count rather than surreptitiously using a congruent TTL of the other node? Yes, the MITM used not only its own TTL but also its own value for the IP Fragment ID (the IP Identification field), a field that numbers the datagrams sent from a node.
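The TTL comparison can be sketched in a few lines. This is an illustration under stated assumptions, not the analyzer workflow we actually ran: the `Packet` record, the function names and the sample addresses and TTL values are all hypothetical, and the IP ID check is left out to keep it short. The idea is simply to learn each sender's normal TTL from its non-Reset traffic and flag any Reset that arrives with a different one.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str   # sender's IP address as seen in the capture
    ttl: int   # IP TTL observed at the capture point
    rst: bool  # True if the TCP RST flag is set


def baseline_ttl(packets, src):
    """Most common TTL on non-RST packets from src, or None if there are none."""
    ttls = Counter(p.ttl for p in packets if p.src == src and not p.rst)
    return ttls.most_common(1)[0][0] if ttls else None


def incongruent_resets(packets):
    """Return RST packets whose TTL differs from their sender's normal TTL."""
    flagged = []
    for p in packets:
        if not p.rst:
            continue
        base = baseline_ttl(packets, p.src)
        if base is not None and p.ttl != base:
            flagged.append(p)
    return flagged


# Hypothetical capture: normal traffic "from" 10.0.0.2 arrives with TTL 52,
# but the Reset claiming the same source arrives with TTL 61.
capture = [
    Packet("10.0.0.2", 52, False),
    Packet("10.0.0.2", 52, False),
    Packet("10.0.0.2", 61, True),
]
print(incongruent_resets(capture))
```

A Reset that truly came from the far end would have crossed the same routers as the data and arrived with the same TTL, so a flagged packet suggests something closer (or farther) in the path wrote it.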

Detailed knowledge of theory really pays dividends on this one. Mine comes from practical analysis of complex problems and from teaching and authoring the Certified NetAnalyst Network Forensic Professional Program, which provides advanced TCP/IP protocol theory and a 3D-like practical understanding. So what does all this illustrate?

It illustrates that a device somewhere in the path was actually inserting the TCP Resets, sending them in both directions at once and making each node believe the other wanted to Reset the TCP connection. It also revealed how many router hops away from each end the MITM was located, allowing step-by-step backtracking to the responsible device: a Linux IPTABLES Firewall. And it showed that this was indeed not an interloper surreptitiously and purposely aborting the mission-critical transfer midstream.
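Turning a TTL into "hops away" needs one assumption: the value the sender started with. A rough sketch follows, assuming the sender used one of the commonly seen initial TTLs (64, 128 or 255) and that the path had fewer hops than the gap between those values. The function name and the sample TTLs are hypothetical.

```python
# Commonly seen initial TTL values. This is an assumption: a device can be
# configured to start from any value, which would make the estimate wrong.
COMMON_INITIAL_TTLS = (64, 128, 255)


def hops_from_ttl(observed_ttl: int) -> int:
    """Estimate router hops between the sender and the capture point."""
    if not 0 <= observed_ttl <= 255:
        raise ValueError("TTL is an 8-bit field")
    for initial in COMMON_INITIAL_TTLS:
        if observed_ttl <= initial:
            # Each router decrements TTL by one, so the difference is the hop count.
            return initial - observed_ttl
    raise AssertionError("unreachable: 255 bounds every valid TTL")


# Hypothetical values: data from the far end arrives with TTL 52,
# while the injected Reset arrives with TTL 61.
print(hops_from_ttl(52), hops_from_ttl(61))
```

With an estimate from each end, you can count routers inward from both capture points and see which device the two counts converge on.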

By this time the technologist responsible for the security architecture was negotiating the ledge of the roof of the company headquarters, looking for a spot from which to jump, feeling responsible for the company’s scramble to deliver media around the nation through alternate means. Negotiating him off the ledge required answers about this device’s behavior.

No admission could be found in the internals of the Linux IPTABLES Firewall application or platform. No log, verbose or otherwise, and no advanced debug level indicated that the device was responsible.

I’d been onsite for two days, and the entire core IT team had been sequestered for two straight weeks to solve the problem. It reminds me of the recent Mad Men episode where Chevy wanted new concepts for their ad campaign by Monday, so the firm had a doctor come in to inject the creative team for a 72-hour marathon weekend session. Don Draper and the team produced nothing from the drugs, but the meth-induced behavior made for an interesting episode.

Our team, by contrast, was productive: we quickly replaced the open source firewall with a Cisco PIX provided by the local representative. In the interest of root cause analysis, we then inserted a clean install of a plain vanilla Linux version on another hardware platform, without IPTABLES installed, and it caused the same behavior, which pointed to the Linux kernel itself. At this point it was not important to the customer to pay for digging any deeper into the Linux problem, as the commercial alternative was acceptable, in place and operating successfully.

The Security manager felt his way off the ledge, accepting that the open source product he bet the company on was to blame despite his best efforts to configure it well and save the company the cost of commercial firewalls. He now manages Linux IPTABLES Firewalls at your ISP.

Mad Men’s Don Draper would be proud, sign bonus checks and allow a wild office party – none of which was done by our conservative customer so we’ll just be happy writing this story of our successful experience.